Seven Unique Factors of First-Cut Alfalfa

by Mike Rankin, University of Wisconsin Extension

The uniqueness of spring alfalfa growth has been at the core of many discussions, research trials, media articles, debates, and flat-out arguments among forage brethren. Generally, the conversation centers on when to cut and how best to determine the forage quality of the maturing alfalfa plant.

Spring 2013 may add further complications to first-cut harvest timing decisions, as many fields experienced the added 2012 stresses of drought, insect pressure, and additional harvests.

Farmers and scientists have been able to solve a plethora of agricultural problems over the years, but the yield-quality-persistence trade-off that comes with producing alfalfa has only partially been softened by improved genetics. As such, recommendations for first-cut harvest strategies become a litany of options that depend on the feed needs of the individual farm and the operator’s willingness to accept risk among the competing factors of forage yield, quality, and stand persistence.

Here are seven factors that make first-cut alfalfa different from every other harvest of the season.

1. Growing Environment

A discussion of first-cut forage quality has to begin with the growing environment. At no other time during the growing season is there the potential for such a wide range of environmental conditions: cool and wet, cool and dry, hot and wet, or hot and dry. This potential range in growing environment has a profound impact on both alfalfa growth and forage quality from year to year.

Making accurate estimates of harvested forage quality (bales or haylage) is an impossible task. Why, then, should it be assumed that estimating the quality of standing alfalfa is any easier? It’s not, especially for first-cutting. A primary reason no single criterion can predict the optimum cutting time is that the environment plays a key role in both alfalfa growth and forage quality. Moisture stress and/or cool temperatures result in a slower decline in forage quality and generally slower growth. Conversely, high temperatures result in a more rapid decline in digestibility and increased growth, assuming adequate moisture is present.

These plant responses to environmental conditions interact with one another and are the primary cause of the many possible growth and forage quality scenarios encountered in the spring. Keeping these relationships in mind will help when monitoring forage quality this spring. This is what comprises the “art” of haymaking.

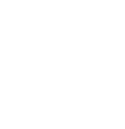

2. Fiber Digestibility: First to Worst

Fiber digestibility usually takes a wild ride during spring alfalfa growth. First-cut neutral detergent fiber digestibility (NDFD) can be, and often is, higher than that of any other cutting of the season. This has certainly proven to be the case in Wisconsin Alfalfa Yield and Persistence project fields (Figure 1). During the six years of the project, first-cut NDFD averaged 49.8%, about 3.5 percentage units more than second- or fourth-cut and 6.5 units more than third-cut. Interestingly, the average NDF% for first-cut and fourth-cut in these same fields was exactly the same (data not shown). Even small increases in fiber digestibility can make for large differences in milk production.

3. A Steep, Downhill Slope

Though first-cutting offers the opportunity to harvest the most digestible fiber of the growing season, forage quality declines faster for first-cut than for subsequent cuttings. This also creates the possibility of harvesting large quantities of very poor, low-digestibility forage once flowering stages are reached. Hence, a timely first-cut is essential if high forage quality is the primary objective. To achieve a target forage quality, the spring harvest window is often narrower than for subsequent growth cycles. As noted earlier, this downhill ride in forage quality is accelerated by warm temperatures. It also becomes more dramatic if grass is present in the stand.

4. Need & Ability to Estimate Forage Quality

Given the first three factors, estimating first-cut forage quality while the crop is still standing “on the hoof” becomes a necessity if forage quality train wrecks are to be avoided. Early attempts to gauge forage quality solely by maturity stage or calendar date proved inconsistent at best and a total failure at worst. Spring growing conditions are simply too variable.

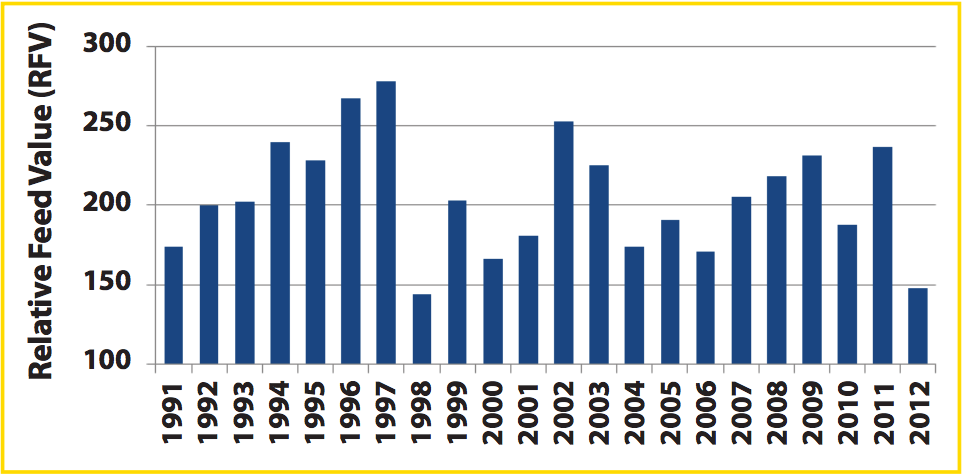

The problem with using calendar date as a harvest timing criterion is documented in Figure 2. Using alfalfa scissors-cutting data, relative feed value (RFV) has been tracked at specific dates in Fond du Lac County, WI, since 1991. The graph displays RFV for May 20, which ranged from 144 (1998) to 278 (1997). Similarly, large year-to-year differences in RFV are also seen at the same visual maturity stage.
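
For readers less familiar with RFV, the index is conventionally calculated from a lab’s acid detergent fiber (ADF) and neutral detergent fiber (NDF) results, scaled so that full-bloom alfalfa (41% ADF, 53% NDF) scores about 100. The Python sketch below assumes that standard formula; the function name is illustrative, and only the 144 and 278 values come from the Fond du Lac County data.

```python
def relative_feed_value(adf_pct: float, ndf_pct: float) -> float:
    """Standard RFV from ADF and NDF, both expressed as % of dry matter."""
    ddm = 88.9 - 0.779 * adf_pct  # digestible dry matter, % of DM
    dmi = 120.0 / ndf_pct         # dry matter intake, % of body weight
    return ddm * dmi / 1.29       # 1.29 scales full-bloom alfalfa to about 100


# Reference check: full-bloom alfalfa should land near 100.
print(round(relative_feed_value(41.0, 53.0)))  # 100

# Spread between the lowest and highest May 20 RFV shown in Figure 2.
print(278 - 144)  # 134
```

A 134-point spread on a single calendar date is the reason the date alone makes a poor cutting cue.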

Once it was recognized that a better forage quality estimator was needed for first-cut, methods such as scissors-cutting (sampling fresh forage for lab analysis), Predictive Equations for Alfalfa Quality (PEAQ), and accumulated growing degree units (base 41°F) were developed and used in the Midwest. Each method has advantages and disadvantages, but all have proven reliable enough to prevent gross miscalculations of first-cut forage quality from year to year.

Because these methods are relatively easy to use, one is often used to verify another. Many good websites and Internet papers discuss all of these methods in detail; some even compare the methods against one another. The important point is that implementing one of these methods is better than doing nothing.
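
Of these methods, accumulated growing degree units is the simplest to compute from daily temperature records. The Python sketch below assumes the simple daily-average method with a 41°F base and no upper temperature cap, which is one common convention; programs differ on the start date (for example, a fixed calendar date versus spring green-up) and on any harvest target, so no target value is assumed here. The function names and temperatures are hypothetical.

```python
from typing import Iterable, Tuple

BASE_F = 41.0  # base temperature for alfalfa growing degree units, °F


def daily_gdu(t_max_f: float, t_min_f: float, base_f: float = BASE_F) -> float:
    """One day's GDU: mean air temperature minus the base, floored at zero."""
    return max(0.0, (t_max_f + t_min_f) / 2.0 - base_f)


def accumulated_gdu(daily_temps: Iterable[Tuple[float, float]],
                    base_f: float = BASE_F) -> float:
    """Sum daily GDU over (max, min) temperature pairs from the chosen start date."""
    return sum(daily_gdu(t_max, t_min, base_f) for t_max, t_min in daily_temps)


# Hypothetical three-day stretch of (max, min) temperatures in °F.
days = [(60.0, 40.0), (70.0, 46.0), (50.0, 30.0)]
print(accumulated_gdu(days))  # 9 + 17 + 0 = 26.0
```

Cool days add little or nothing to the total, which is how the method reflects the slower spring growth described under the first factor.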

5. Yield Potential

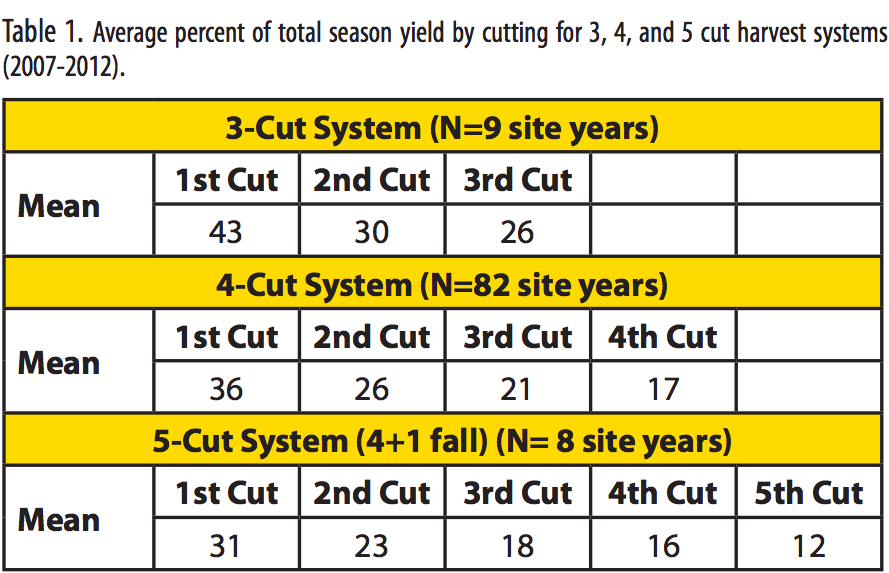

It is never just about forage quality. First-cutting also has some unique yield characteristics. Spring alfalfa growth almost always provides the highest percentage of total-season dry matter (DM) yield. Data from the Wisconsin Alfalfa Yield and Persistence project are presented in Table 1. The mean first-cut percentage of total-season DM yield ranged from 43% for a 3-cut system to 31% for a 5-cut system, but in all cases it was the highest of any cutting. The economic consequences of a first-cut timing mistake are greater than for any other cutting because there is usually more forage at stake than in any other cutting.

As with forage quality, yield changes more rapidly during initial spring growth than during summer and fall growth cycles. Also like forage quality, the growing environment dictates the rate of DM accumulation per acre. The average rate is reported to be about 100 lb/acre/day during the late-vegetative to late-bud stages. If air temperatures are warm, it will be greater than 100 lb; if cool, less than 100 lb.

At this point, the 800-pound gorilla in the room needs addressing. Under normal growing conditions, a 5-day delay in first-cut alfalfa harvest results in a gain of about 0.25 tons DM/acre but a decline of about 20 points in RFV (or perhaps relative forage quality, RFQ). Deal or no deal? There is no right answer that applies to every farm; it is a question to discuss with the farm’s nutritionist and agronomist. The answer will likely be different if the alfalfa is at 205 RFV versus 175. It may also differ based on the moisture and temperature outlook in a 7-day weather forecast. The yield × quality relationship, or perhaps dilemma, remains alive and well.
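
The arithmetic behind the 5-day example follows from the rates given above: 5 days at about 100 lb DM/acre/day is 500 lb, or 0.25 ton, while a 20-point RFV drop over 5 days works out to roughly 4 points per day. The Python sketch below assumes both rates stay constant through the delay, which spring weather will not guarantee; the function name and defaults are illustrative.

```python
LB_PER_TON = 2000.0


def delay_tradeoff(days: float,
                   dm_gain_lb_per_acre_day: float = 100.0,
                   rfv_loss_per_day: float = 4.0):
    """Return (tons DM/acre gained, RFV points lost), assuming linear rates."""
    tons_gained = days * dm_gain_lb_per_acre_day / LB_PER_TON
    rfv_lost = days * rfv_loss_per_day
    return tons_gained, rfv_lost


gain, loss = delay_tradeoff(5)
print(gain, loss)  # 0.25 20.0

# The same 5-day delay applied to the two stands mentioned above.
for start_rfv in (205, 175):
    print(start_rfv, "->", start_rfv - loss)  # 185.0 and 155.0
```

Warm weather tends to raise both rates at once, so a forecast-based adjustment would change both parameters together rather than just one. The sketch puts no price on the extra tons or the lost quality; that weighing is the conversation with the nutritionist.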

6. The Pacesetter

First-cut timing sets the pace for the rest of the growing season. In other words, the first-cut harvest date may dictate how many future cuttings will be taken, the interval between those cuttings, and/or how late into the fall the last cutting will be harvested. First-cutting is the only harvest of the year that is not tied to a number of days since a previous harvest. The decision options are wide open, but the consequences of the decision affect the rest of the season. Forage quality and yield considerations aside, an earlier initial harvest date often makes better use of available soil moisture for the second-cutting and expands harvest options for the remainder of the season. On the other hand, an early-cut decision may be more detrimental to a stand that was stressed the previous year or during the winter.

7. The Longest Wait

Finally, after suffering through seven months of snow, mud, reading blogs, and wearing long underwear, it’s time to make hay. This is perhaps the best thing unique to first-cutting.